Build/Test a sentiment model

Once you have created a sentiment model and its entries, you can test it with any of the services that support sentiment analysis. The Build action ensures that those services use the most recent version of the model.

Currently, there are two services that support sentiment models:

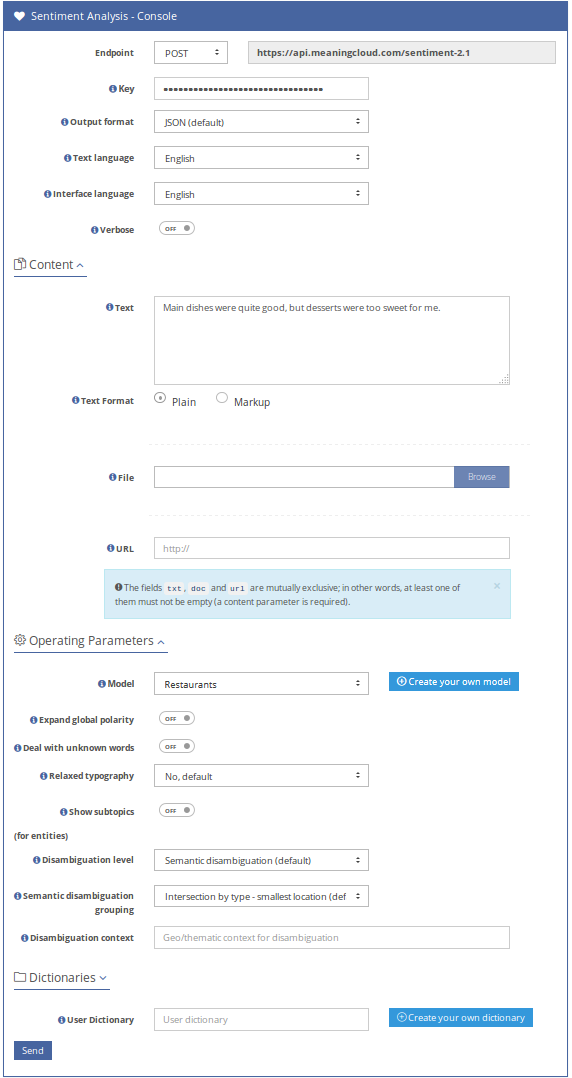

By default, sentiment models are tested using the Sentiment Analysis API. Clicking the Test button, whether in the sentiment models dashboard, in the sidebar of the different model views, or on the build page, opens the Sentiment Analysis Test Console with your license key and your sentiment model already selected.

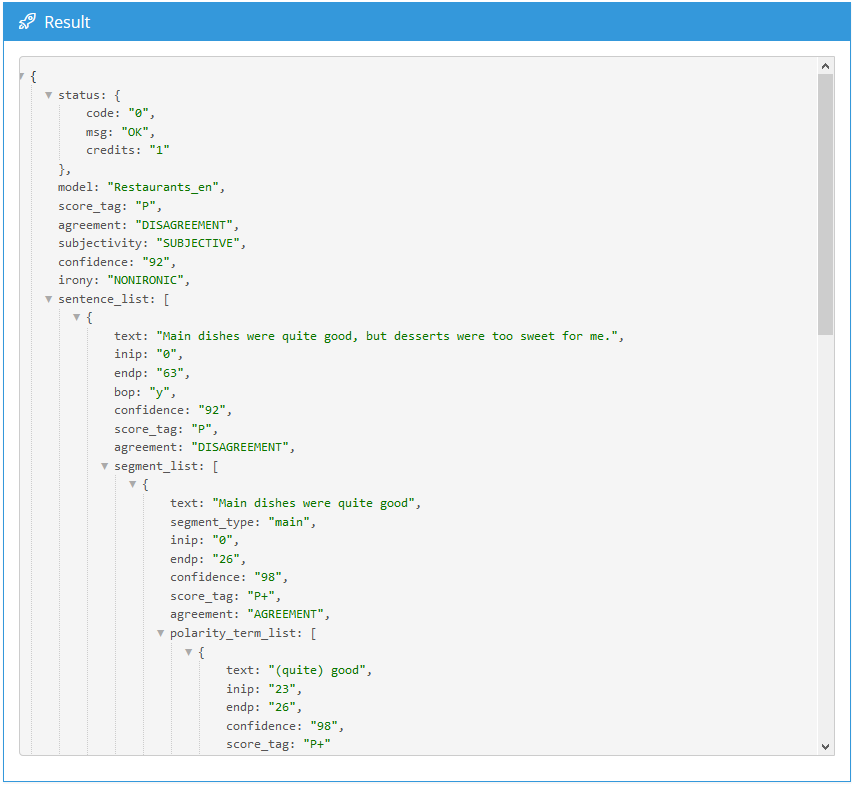

In the image above, we are testing our example sentiment model with a restaurant review. These are the results:

In the results, you can see the terms defined in your own model and how they interact with the entities and concepts detected in the text. Sentiment is reported at three levels of the text:

- Aggregated sentiment of the whole text.

- Sentiment detected at sentence level.

- Sentiment detected in sentence divisions, at segment level.

Each of these analyses has three important fields: the confidence of the analysis, a score_tag showing the overall sentiment of the analyzed text, and the agreement between the different parts of the analyzed text.
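As a rough illustration of how these three fields could be read at every level, here is a minimal Python sketch. It works on a hand-written dictionary, not on real API output: the nesting keys `sentence_list` and `segment_list`, the sample values, and the helper names `iter_levels` and `summarize` are assumptions made for the example, so check them against the actual response you get from the Test Console.

```python
from typing import Iterator, List, Optional, Tuple

# Hypothetical, hand-written response fragment (NOT real API output).
# "sentence_list" and "segment_list" are assumed names for the nested levels;
# all values below are made up for illustration.
SAMPLE = {
    "score_tag": "P", "confidence": "92", "agreement": "DISAGREEMENT",
    "sentence_list": [
        {
            "score_tag": "P", "confidence": "92", "agreement": "DISAGREEMENT",
            "segment_list": [
                {"score_tag": "P+", "confidence": "100", "agreement": "AGREEMENT"},
                {"score_tag": "N", "confidence": "85", "agreement": "AGREEMENT"},
            ],
        }
    ],
}


def iter_levels(response: dict) -> Iterator[Tuple[str, dict]]:
    """Yield (level_name, node) for the whole text, each sentence and each segment."""
    yield "text", response
    for sentence in response.get("sentence_list", []):
        yield "sentence", sentence
        for segment in sentence.get("segment_list", []):
            yield "segment", segment


def summarize(response: dict) -> List[Tuple[str, Optional[str], Optional[str], Optional[str]]]:
    """Collect (level, score_tag, confidence, agreement) for every level found."""
    return [
        (level, node.get("score_tag"), node.get("confidence"), node.get("agreement"))
        for level, node in iter_levels(response)
    ]


if __name__ == "__main__":
    for row in summarize(SAMPLE):
        print(row)
```

Missing fields come back as `None` instead of raising an error, which keeps the sketch usable if a level lacks one of the three fields.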

At the segment level, the results include a list with information about the polarity_terms from your model, that is, the entries and subentries you have defined. Each item in this list has a sentimented_entity_list (or sentimented_concept_list) when the term affects an entity or concept detected in the text.
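The relationship between polarity terms and the entities or concepts they affect can be sketched in Python as follows. The segment below is invented for the example, and the `text` and `form` keys, as well as the helper name `affected_targets`, are assumptions; only `polarity_term_list`, `sentimented_entity_list` and `sentimented_concept_list` come from this page.

```python
from typing import List, Tuple

# Hypothetical segment, written by hand for illustration (NOT real API output).
# The "text" and "form" keys are assumed names for the term and the target.
SEGMENT = {
    "polarity_term_list": [
        {"text": "delicious", "score_tag": "P+",
         "sentimented_concept_list": [{"form": "pasta"}]},
        {"text": "slow", "score_tag": "N",
         "sentimented_entity_list": [{"form": "Luigi's"}]},
        # A term that does not affect any entity or concept has neither list.
        {"text": "nice", "score_tag": "P"},
    ]
}


def affected_targets(segment: dict) -> List[Tuple[str, str, str]]:
    """Return (term, kind, target) for every entity or concept a polarity term affects."""
    rows = []
    for term in segment.get("polarity_term_list", []):
        for kind, key in (("entity", "sentimented_entity_list"),
                          ("concept", "sentimented_concept_list")):
            for target in term.get(key, []):
                rows.append((term["text"], kind, target["form"]))
    return rows


if __name__ == "__main__":
    for term, kind, target in affected_targets(SEGMENT):
        print(f"{term!r} affects {kind} {target!r}")
```

Terms without either list, such as `nice` in the sample, are simply skipped.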

Important

Are you seeing a term in a polarity_term_list that you have not defined as an entry or subentry in your model? That's because your model inherits from MeaningCloud's generic domain model. If you do not want one of these terms to appear in your analyses, define it as an entry in your model with the dismiss base model entries field enabled, as explained in the Advanced settings page.