Emotions govern our daily lives; they are a big part of the human experience, and inevitably they affect our decision-making. We tend to repeat actions that make us feel happy, but we avoid those that make us angry or sad.

Information spreads quickly over the Internet, much of it as text, and, as we know, emotions tend to intensify when they go unaddressed.

Thanks to natural language processing, this subjective information can be extracted from written sources such as reviews, recommendations, publications on social media, transcribed conversations, etc., allowing us to understand the emotions expressed by the author of the text and therefore act accordingly.

But… What’s an emotion?

If we go to psychological theory, an emotion “is a complex psychological state that involves three distinct components: a subjective experience, a physiological response, and a behavioral or expressive response”. From this definition, we can take away two ideas:

- Subjectivity & complexity: we do not all react the same way to similar situations. What may offend one person may seem funny to another. Besides this, we can experience a mix of emotions at any one time, and it is not easy to understand how we feel ourselves, let alone how another person feels.

- Response: when you feel an emotion, there is an associated physiological response (sweating, a faster heart rate or breathing rate…) as well as gestures and expressions such as smiling or crossing your arms.

Beyond defining what emotions are, many theories have proposed classifying them into different types. Among the most significant studies, we can highlight the following:

- Paul Ekman is a pioneer in the study of emotions and their relation to facial expressions. He defined six basic emotions: fear, disgust, anger, surprise, happiness, and sadness. Later, he proposed an expanded list.

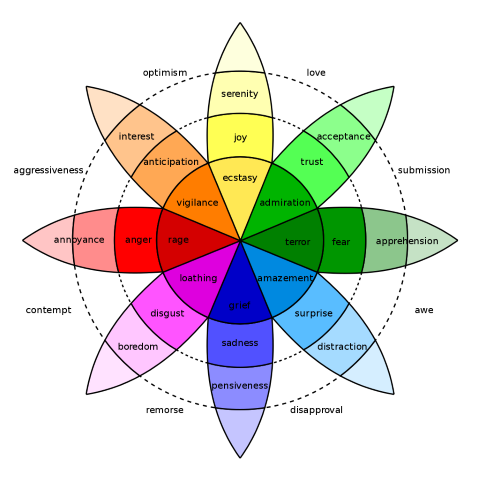

- Robert Plutchik proposed a psycho-evolutionary classification approach for general emotional responses. To explain his proposal graphically, he drew the famous “wheel of emotions”, which consists of eight basic emotions arranged in four bipolar pairs: joy vs. sadness, trust vs. disgust, anger vs. fear, and surprise vs. anticipation.

- Parrott identified over 100 emotions based on physiological response and conceptualized them as a tree-structured list in 2001. He defined these primary emotions: love, joy, surprise, anger, sadness, and fear.

- Lövheim proposed a direct relation between specific combinations of the levels of the signal substances dopamine, noradrenaline, and serotonin and eight basic emotions. The eight basic emotions were represented in the corners of a cube. They were anger, interest, distress, surprise, fear, joy, shame, and disgust.
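When building or comparing emotion classifiers, it can help to write these label sets down explicitly. The following is a minimal sketch that only encodes the lists given above; the names `EMOTION_MODELS`, `PLUTCHIK_PAIRS`, `plutchik_opposite`, and `shared_labels` are hypothetical and chosen for illustration.

```python
# Basic/primary emotion labels exactly as listed in the text above.
# The dictionary layout and all names are illustrative assumptions.
EMOTION_MODELS = {
    "ekman": ["fear", "disgust", "anger", "surprise", "happiness", "sadness"],
    "plutchik": ["joy", "sadness", "trust", "disgust",
                 "anger", "fear", "surprise", "anticipation"],
    "parrott": ["love", "joy", "surprise", "anger", "sadness", "fear"],
    "lovheim": ["anger", "interest", "distress", "surprise",
                "fear", "joy", "shame", "disgust"],
}

# Plutchik's four bipolar pairs.
PLUTCHIK_PAIRS = [
    ("joy", "sadness"),
    ("trust", "disgust"),
    ("anger", "fear"),
    ("surprise", "anticipation"),
]


def plutchik_opposite(emotion):
    """Return the emotion on the opposite side of Plutchik's wheel."""
    for first, second in PLUTCHIK_PAIRS:
        if emotion == first:
            return second
        if emotion == second:
            return first
    raise ValueError(f"{emotion!r} is not one of Plutchik's basic emotions")


def shared_labels():
    """Return the labels that appear in every model listed above."""
    label_sets = [set(labels) for labels in EMOTION_MODELS.values()]
    return set.intersection(*label_sets)


if __name__ == "__main__":
    print(plutchik_opposite("trust"))
    print(sorted(shared_labels()))
```

Comparing the lists this way makes it easy to see that the models only partially overlap, which matters when mapping the output of one tool onto another taxonomy.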

But how does all of this apply to a more practical scenario? Let’s take Voice of Customer analysis as an example. Often, we decide to buy products based on both our previous experiences and the recommendations of others. People will recommend a specific product if they are happy with the product/brand/service, but they will also move heaven and earth to complain if they’re angry.

In this second scenario, with the speed at which news can fly nowadays via social media, if an angry customer is someone with a large enough audience — or even someone without a big audience but with a complaint that gains momentum — it could end up being a serious PR problem for the company.

Emotion Recognition

Emotion Recognition is the process of identifying human emotion from both facial and verbal expressions. As we have seen, to detect emotion in text, NLP techniques, machine learning, and computational linguistics are used.

If Sentiment Analysis is already a challenge due to the subjectivity of language and phenomena such as irony or sarcasm, emotion recognition takes it one step further: it tries to provide an in-depth understanding of a person’s actions.

Emotion Recognition and Sentiment Analysis are often confused with one another, so let’s dig in a little. Sentiment Analysis generally extracts a polarity from the text, that is, whether the sentiment expressed in it is positive, negative, or neutral. Even though this can be extremely useful, it does not go into the underlying reasons for the sentiment output. Those underlying reasons are what we try to detect with Emotion Recognition.

Let’s get back to the Voice of the Customer scenario. On the one hand, aspect-based Sentiment Analysis is instrumental. It lets us know how people talk about the product and its different features, draw statistics, and make decisions to highlight the strengths and improve the weaknesses.

On the other hand, with emotion recognition, it will be possible to identify a person’s profile and act accordingly, thus making it possible to empathize with the client.

For example, “This product is not friendly” and “I hate this product, it’s the worst 😡” both express negative sentiment, but emotionally they are very different. In both cases, direct contact (one-to-one marketing) can be a good way to improve the customer’s opinion, but marketers and client support teams should handle each case differently. For dealing with angry customers, there are even established procedures, which typically involve using gentle language and trying to calm the client.
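The difference between the two outputs can be sketched with a deliberately tiny lexicon-based example. This is not how MeaningCloud or any production system works; the word lists, the one-word negation rule, and the function names are all assumptions made up for illustration.

```python
import re
from collections import Counter

# Hypothetical toy lexicons, invented for this example only.
POLARITY_LEXICON = {"hate": -1, "worst": -1, "friendly": 1, "love": 1, "great": 1}
EMOTION_LEXICON = {"hate": "anger", "worst": "anger", "😡": "anger",
                   "love": "joy", "great": "joy"}
NEGATORS = {"not", "never", "no"}


def tokenize(text):
    """Split into lowercase words and single symbols (emoji, punctuation)."""
    return re.findall(r"[a-z']+|[^\w\s]", text.lower())


def polarity(text):
    """Sum word scores, flipping a score when the previous token negates it."""
    tokens = tokenize(text)
    score = 0
    for i, token in enumerate(tokens):
        value = POLARITY_LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def dominant_emotion(text):
    """Return the most frequent emotion label, or None if none is found."""
    counts = Counter(
        EMOTION_LEXICON[token] for token in tokenize(text) if token in EMOTION_LEXICON
    )
    return counts.most_common(1)[0][0] if counts else None


if __name__ == "__main__":
    for sentence in ("This product is not friendly",
                     "I hate this product, it’s the worst 😡"):
        print(sentence, "|", polarity(sentence), "|", dominant_emotion(sentence))
```

Both sentences come out with the same polarity, while only the second one carries an explicit emotion label. That extra label is the signal a support team would use to decide how to respond.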

Use Cases

As you can already guess from what we have discussed, identifying emotions can be beneficial in multiple scenarios. Let’s see some of them:

- Social media analysis. Thanks to this valuable information, you will be able to define alerts to avoid a reputational crisis or define priorities for action to provide insights and improve user experience.

- Customer Experience improvement. Quickly identifying customer needs and complaints in your feedback is key to preserving a company’s competitiveness.

- Measure the happiness of your employees. Discover internal problems early and avoid talent leaks. Benefit from the better performance associated with happier employees.

- Integration with chatbots. Build believable dialogs by detecting customer feelings. Chatbots are a growing field in which users increasingly expect more than a machine: they expect a well-defined personality. It is therefore fundamental that chatbots can adapt to the conversation and empathize.

- Improvement in marketing tasks and support conversations. Thanks to emotion analysis, you will be able to answer clients more appropriately and improve your customer relationship management.

- Call center performance monitoring. Monitor the conversations your agents hold to improve communication and the service given in your call centers.
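As an illustration of the alerting idea mentioned in the social media use case, here is a minimal sketch. It assumes that some upstream emotion classifier has already assigned one label per message; the function name `should_alert` and the threshold values are hypothetical and would need tuning for any real data.

```python
from collections import Counter


def should_alert(labels, emotion="anger", threshold=0.3, min_messages=5):
    """Decide whether the share of one emotion in a batch warrants an alert.

    labels: one emotion label per message, as produced by an upstream
            classifier (assumed, not shown here).
    threshold and min_messages are arbitrary illustrative defaults.
    """
    if len(labels) < min_messages:
        # Too few messages to draw any conclusion.
        return False
    share = Counter(labels)[emotion] / len(labels)
    return share >= threshold


if __name__ == "__main__":
    recent = ["anger", "anger", "joy", "anger", "sadness",
              "anger", "joy", "joy", "joy", "joy"]
    print(should_alert(recent))
```

Requiring a minimum number of messages avoids raising an alert on a couple of isolated complaints, while the share-based threshold reacts when a complaint starts gaining momentum.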

We have just released MeaningCloud’s Emotion Recognition Pack, where you can detect emotions explicitly expressed in all types of unstructured content. There are two ways of gaining access to the Emotion Recognition Vertical Pack:

- Requesting the 30-day free trial that we offer for all our packs.

- Subscribing to the pack you are interested in, in this case the Emotion Recognition Pack.

For any questions, we are available at support@meaningcloud.com.

Continue reading the second part of this post, explaining how we approach Emotion Recognition and how it compares to other approaches.