One of the most common and extensively studied knowledge extraction tasks is text categorization. Customers frequently ask how we evaluate the quality of the output of our categorization models, especially in scenarios where each document may belong to several categories.

The idea is to keep track of changes during the continuous improvement cycle of our models and to know whether those changes are for better or worse, so that we can commit or reject them.

This post answers that question by describing the metrics we commonly adopt to assess model quality, depending on the categorization scenario we are facing.

Single-label vs Multi-label categorization problems

In some scenarios, each data sample is associated with just a single category, also called a class or label, which may take two or more possible values. When there are two values, the problem is called binary categorization; otherwise, it is multi-class categorization. For instance, an email message may be spam or not (binary), or the weather may be sunny, overcast, rainy or snowy (multi-class). Formally, this is called a single-label categorization problem, where the task is to associate each text with a single label from a set of disjoint labels L, where |L| > 1 (|L| is the size of the label set; |L| = 2 in the binary case).

However, in the real world it is often not sufficient to say that a text belongs to a single category. Depending on the granularity and coverage of the label set, a text may intrinsically need to be assigned more than one category; for instance, a newspaper article about climate change may be associated with environment, but also with pollution, politics, etc. This scenario is called multi-label categorization, and the task is to associate each text with a subset of categories Y from a set of disjoint labels L, where Y ⊆ L (Y is a subset of L).

Metrics for single-label categorization problems

In single-label scenarios, the usual metrics are calculated using the confusion matrix, which is a specific table layout that makes it very easy to see if the classifier is returning the correct label or not and which labels the system is misclassifying.

The confusion matrix allows us to calculate the number of true positives (TP, correctly returned labels), false positives (FP, labels the classifier returns incorrectly), true negatives (TN, labels correctly not returned) and false negatives (FN, labels the classifier fails to return although it should have).

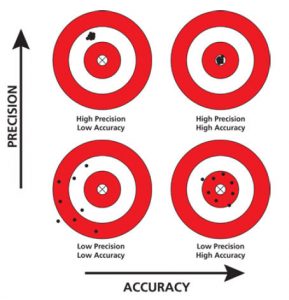
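As a minimal sketch of how the four counts are read from the matrix for one category of a multi-class problem, the Python snippet below assumes that rows are the true classes and columns the predicted ones (the figure may use the opposite orientation). The function name `per_class_counts` and the matrix values are hypothetical.

```python
from typing import List, Tuple

def per_class_counts(cm: List[List[int]], k: int) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for class k.

    Assumes cm[i][j] counts samples whose true class is i and whose
    predicted class is j (rows = truth, columns = prediction).
    """
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(len(cm))) - tp  # predicted k, truth is another class
    fn = sum(cm[k]) - tp                             # truth is k, predicted another class
    tn = total - tp - fp - fn                        # neither predicted nor truly k
    return tp, fp, fn, tn

# Hypothetical 3-class weather example: sunny, overcast, rainy
cm = [
    [50, 3, 2],
    [4, 30, 6],
    [1, 5, 40],
]
print(per_class_counts(cm, 0))  # (50, 5, 5, 81)
```

In a multi-class setting each class is treated in turn as the "positive" one, so every class gets its own TP, FP, FN and TN.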

There are many metrics based on these figures, but the most common are precision and recall (also called sensitivity):

precision = TP / (TP + FP) -> “how many of the returned labels are correct”

recall = TP / (TP + FN) -> “how many of the labels that should have been returned are actually returned”

Precision and recall usually trade off against each other: typically, when quality (precision) increases, quantity (recall) decreases, and vice versa. To find a balance between them, there is the combined metric F1, the harmonic mean of precision and recall:

F1 = 2*precision*recall / (precision + recall) -> “a balance between the quantity and the quality of labels”
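The three formulas above translate directly into code. This sketch assumes that a metric with a zero denominator is reported as 0.0, which is a common convention but not the only one; the function names are illustrative.

```python
def precision(tp: int, fp: int) -> float:
    # Fraction of returned labels that are correct (0.0 if nothing was returned)
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp: int, fn: int) -> float:
    # Fraction of expected labels that were actually returned
    return tp / (tp + fn) if tp + fn else 0.0

def f1(p: float, r: float) -> float:
    # Harmonic mean of precision and recall
    return 2 * p * r / (p + r) if p + r else 0.0

p = precision(tp=8, fp=2)  # 0.8
r = recall(tp=8, fn=8)     # 0.5
print(p, r, f1(p, r))      # F1 is about 0.615, closer to the lower of the two
```

Because it is a harmonic mean, F1 is pulled towards the smaller of precision and recall, so a classifier cannot score well by excelling at only one of them.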

Accuracy is another combined metric, which also takes TN into account and measures whether the classifier returns exactly what it is supposed to return:

accuracy = (TP + TN) / (TP + TN + FP + FN) -> “the classifier does what it is supposed to do”
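A small hypothetical example shows why including TN matters: when negatives dominate, accuracy can look excellent while recall is poor. The spam-filter counts below are invented for illustration.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0

# Hypothetical spam filter on 1000 emails, only 20 of which are spam:
# it catches 5 spam (TP), misses 15 (FN) and wrongly flags 5 legitimate ones (FP).
tp, fn, fp = 5, 15, 5
tn = 1000 - tp - fn - fp  # 975 legitimate emails correctly left alone

print(accuracy(tp, tn, fp, fn))  # 0.98
print(tp / (tp + fn))            # recall: 0.25
```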

There is an additional consideration for multi-class categorization. If true/false positives/negatives are counted globally, pooling all labels together, the resulting metrics are called micro-averaged. If the metrics are first calculated for each individual category and then averaged, they are called macro-averaged. Micro-averages give an idea of the global performance of the system and are dominated by the most frequent categories, whereas macro-averages weight every category equally and are more appropriate when classes are very unbalanced, since they reveal poor performance on rare categories.
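To make the difference concrete, here is a sketch with two hypothetical classes, one frequent and one rare; the per-class counts are invented for illustration.

```python
from typing import Dict, Tuple

# Hypothetical per-class counts (TP, FP, FN): one frequent class, one rare class
counts: Dict[str, Tuple[int, int, int]] = {
    "sunny": (90, 10, 10),
    "snowy": (1, 4, 4),
}

def prf(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    # Precision, recall and F1 from raw counts (0.0 on empty denominators)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f

# Micro-average: pool the counts over all classes, then compute the metric once
tp = sum(c[0] for c in counts.values())
fp = sum(c[1] for c in counts.values())
fn = sum(c[2] for c in counts.values())
micro_f1 = prf(tp, fp, fn)[2]

# Macro-average: compute the metric per class, then take the unweighted mean
macro_f1 = sum(prf(*c)[2] for c in counts.values()) / len(counts)

print(f"micro F1 = {micro_f1:.3f}, macro F1 = {macro_f1:.3f}")  # 0.867 vs 0.550
```

The rare class barely affects the micro-average, while it drags the macro-average down, which is exactly the signal we want when classes are unbalanced.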

Metrics for multi-label categorization problems

In multi-label problems, however, the prediction for an instance is a set of labels, so a prediction can be fully correct, partially correct or fully incorrect. In addition, apart from evaluating the quality of the categorization into classes, we could also evaluate whether the classes are correctly ranked by relevance.

Focusing on quality, evaluation measures how far the predictions are from the actual labels on unseen data.

Two very basic statistics of a multi-label data set are label cardinality, i.e., the average number of labels per example in the set, and label density, i.e., the number of labels per sample divided by the total number of labels |L|, averaged over the samples.
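Both statistics can be computed directly from the label sets. This is a minimal sketch assuming each sample's labels are stored as a Python set; the label names and annotations are hypothetical.

```python
from typing import List, Set

def label_cardinality(label_sets: List[Set[str]]) -> float:
    # Average number of labels per sample
    return sum(len(y) for y in label_sets) / len(label_sets)

def label_density(label_sets: List[Set[str]], num_labels: int) -> float:
    # Average over samples of |Y_i| / |L|, i.e. cardinality divided by |L|
    return sum(len(y) / num_labels for y in label_sets) / len(label_sets)

# Hypothetical label set L and annotated articles
L = {"environment", "pollution", "politics", "sports"}
Y = [
    {"environment", "pollution", "politics"},
    {"sports"},
    {"politics", "environment"},
    {"sports", "politics"},
]
print(label_cardinality(Y))      # 2.0
print(label_density(Y, len(L)))  # 0.5
```

Density normalizes cardinality by the size of the label set, so it allows comparing data sets that use label sets of different sizes.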

If partially correct predictions are ignored (that is, treated as incorrect), the accuracy used in single-label scenarios can be extended to multi-label prediction. This is called the exact match ratio or subset accuracy: the fraction of samples whose set of predicted labels exactly matches the true set of labels.
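A minimal sketch of the exact match ratio, assuming true and predicted labels are given as parallel lists of sets (the data is hypothetical):

```python
from typing import List, Set

def exact_match_ratio(true_sets: List[Set[str]], pred_sets: List[Set[str]]) -> float:
    # A sample counts only if the predicted set equals the true set exactly
    hits = sum(1 for t, s in zip(true_sets, pred_sets) if t == s)
    return hits / len(true_sets)

T = [{"environment", "pollution"}, {"sports"}, {"politics", "environment"}]
S = [{"environment", "pollution"}, {"sports", "politics"}, {"politics"}]
print(exact_match_ratio(T, S))  # only the first sample matches: 1/3
```

The second and third predictions are each off by a single label, yet they score exactly the same as a completely wrong prediction would.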

As this is a harsh metric, to capture the notion of partial correctness we need to evaluate the difference between the predicted labels (S) and the true labels (T). Shantanu Godbole and Sunita Sarawagi, in their paper “Discriminative methods for multi-labeled classification”, published in Advances in Knowledge Discovery and Data Mining (Springer Berlin Heidelberg, 2004, pp. 22-30), proposed the label-based accuracy (LBA), which symmetrically measures how close S is to T and is nowadays the most popular multi-label accuracy measure:

LBA = |T ∩ S| / |T ∪ S| -> “how many labels are correct among all the labels that are either returned or expected”, computed per sample and averaged over the samples

LBA is in fact a combined metric of precision and recall, as it takes into account both FP (categories in S that should not have been assigned) and FN (categories missing from S). LBA is equivalent to the Jaccard index between S and T, which is used in other scenarios.
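A sketch of LBA as the per-sample Jaccard index averaged over samples. Returning 1.0 when both sets are empty is our own assumption, not part of the original definition, and the data is hypothetical.

```python
from typing import List, Set

def lba(true_sets: List[Set[str]], pred_sets: List[Set[str]]) -> float:
    """Average over samples of |T ∩ S| / |T ∪ S| (the Jaccard index)."""
    scores = []
    for t, s in zip(true_sets, pred_sets):
        union = t | s
        # Assumption: two empty sets are treated as a perfect match
        scores.append(len(t & s) / len(union) if union else 1.0)
    return sum(scores) / len(scores)

T = [{"environment", "pollution"}, {"sports"}, {"politics", "environment"}]
S = [{"environment", "pollution"}, {"sports", "politics"}, {"politics"}]
print(lba(T, S))  # per-sample scores 1.0, 0.5, 0.5 -> mean 2/3
```

Only one of these three samples is an exact match, but the two partially correct predictions still earn half credit each: the extra label in the second sample (an FP) and the missing label in the third (an FN) are penalized symmetrically.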

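The Hamming loss also takes FP and FN predictions into account: it is calculated from the symmetric difference (logical XOR) between S and T, as the fraction of labels in L that are wrongly predicted, averaged over the samples. Unlike LBA, it is a loss, so lower values are better.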
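A sketch of the Hamming loss, normalizing each sample's symmetric difference by the size of the label set |L| and averaging over samples; the label set and data are hypothetical.

```python
from typing import List, Set

def hamming_loss(true_sets: List[Set[str]], pred_sets: List[Set[str]],
                 labels: Set[str]) -> float:
    """Average over samples of |T xor S| / |L|; lower is better."""
    per_sample = [len(t ^ s) / len(labels) for t, s in zip(true_sets, pred_sets)]
    return sum(per_sample) / len(per_sample)

L = {"environment", "pollution", "politics", "sports"}
T = [{"environment", "pollution"}, {"sports"}, {"politics", "environment"}]
S = [{"environment", "pollution"}, {"sports", "politics"}, {"politics"}]
print(hamming_loss(T, S, L))  # (0 + 1/4 + 1/4) / 3 = 1/6
```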

Finally, in this scenario, precision and recall can also be redefined in terms of T and S, computed per sample and averaged over the samples:

precision = |T ∩ S| / |S|

recall = |T ∩ S| / |T|
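A sketch of these set-based precision and recall, averaged over samples. Scoring an empty denominator as 0.0 is an assumption on our side, and the data is hypothetical.

```python
from typing import List, Set, Tuple

def multilabel_precision_recall(true_sets: List[Set[str]],
                                pred_sets: List[Set[str]]) -> Tuple[float, float]:
    precisions, recalls = [], []
    for t, s in zip(true_sets, pred_sets):
        hit = len(t & s)  # |T ∩ S|
        # Assumption: an empty denominator scores 0.0
        precisions.append(hit / len(s) if s else 0.0)
        recalls.append(hit / len(t) if t else 0.0)
    n = len(true_sets)
    return sum(precisions) / n, sum(recalls) / n

T = [{"environment", "pollution"}, {"sports"}, {"politics", "environment"}]
S = [{"environment"}, {"sports", "politics"}, {"politics", "environment", "pollution"}]
p, r = multilabel_precision_recall(T, S)
print(round(p, 3), round(r, 3))  # precision (1 + 1/2 + 2/3) / 3, recall (1/2 + 1 + 1) / 3
```

Predicting too few labels (first sample) lowers recall, while predicting too many (second and third samples) lowers precision.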

Conclusion

To sum up, in single-label problems our usual metrics are precision, recall and F1, micro- or macro-averaged depending on how balanced the categories are, while for multi-label problems LBA is our choice, along with exact match when we want to be very strict with the predictions.

These metrics are easy (and fast) to calculate and allow us to compare the performance of different classifiers.