The contact center is a crucial component of the customer experience and increasingly incorporates channels based on unstructured information. In this post, we analyze how advanced semantic analysis can help get the most out of the contact center of the future.

The Rise of the New Contact Center

Interest in the contact center has grown along with its role as an essential component of the customer experience. New interaction channels (bots, chats, social media) complement traditional email and telephone and enable innovative ways to connect with clients in both inbound and outbound contact centers, whether they are run in-house by companies of all types or operated by BPO vendors that provide outsourced services.

In this way, the contact center (traditionally known as the call center) has ceased to be merely a cost center and has become a tool for proactively communicating with and understanding the market, for multichannel business development, and for generating value for the company.

But this profusion of channels has multiplied the volume, variety and velocity of the unstructured information (voice, email, social, chat, bots) that the center must handle (an inherently big data problem), as well as the difficulty of integrating, managing and leveraging it.

The strategic management of the contact center cannot be based solely on structured data about availability, occupancy, response times or numerical scores given by customers. Analyzing the unstructured content of the interactions provides very valuable information about customers' needs and satisfaction levels and about the quality and efficiency of the operation.

Text Analytics in the Contact Center

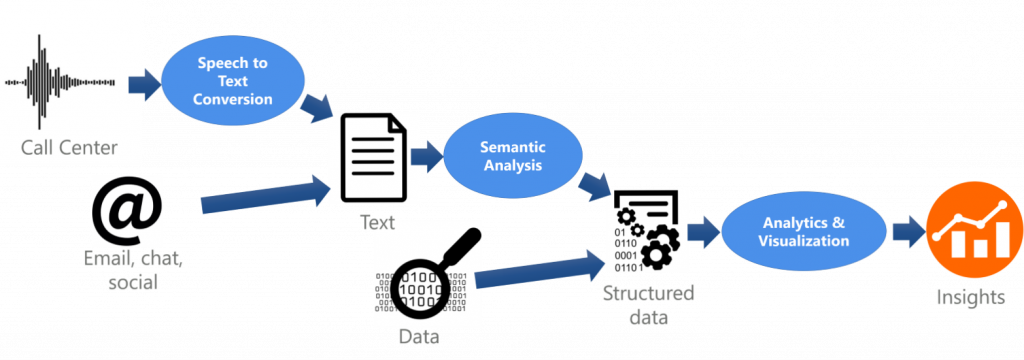

Text analytics is what enables us to turn the content of all these interactions into actionable information. In many cases this content is inherently textual (e.g., email, social, chat); in others (e.g., telephone), it must be converted into text before it can be analyzed.

Once converted and integrated, these textual data undergo processing such as information extraction, thematic classification, and sentiment, emotion and intention analysis, among others, with two main goals:

- Deep customer understanding: identification of needs, problems, attitudes, opinions, drivers of satisfaction or dissatisfaction or perception of our brand.

- Contact center management and optimization: from real-time supervision and support of interactions, to monitoring adherence to procedures and regulatory compliance, to discovering good practices.

These goals can be achieved through two levels of aggregation and analysis:

- Real-time analysis of each individual interaction or call, to monitor the conversation and detect early any warnings or issues that may affect the relationship with the customer or the quality of the contact center service, or to provide real-time conversational guidance in the form of suggestions to the agent that improve engagement with the customer and the quality of the interaction.

- Aggregated, offline analysis of an interaction repository, for segmenting and detecting trends in customers’ opinions and behaviors, and for assessing quality (adherence, compliance) and building predictive models to discover factors correlated with a successful operation.

The Role of Speech Analytics

One of the fields we have focused on over the last year is Speech Analytics. Although the field is not new, the spread of contact centers in recent years, driven by very low entry barriers and large cost reductions, has led to a growing demand for related technologies.

The starting point is a corpus or set of call recordings provided by our customer, or, more often, call transcripts. Each project is different in call length, quality of the transcripts, languages, domains (banking, telco, insurance, retail, health, travel, hospitality, etc.), inbound or outbound contact centers, etc.

If call recordings are provided, the first step is to run speech-to-text to obtain the transcripts. We use third-party solutions, mainly Google Speech To Text, Microsoft Speech To Text API or IBM Watson Speech To Text, each with its pros and cons. Speaker diarization, i.e., separating the voice of each speaker, is highly desirable, especially for distinguishing the agent from the customer, to allow for individualized analysis.

Accurate transcripts achieve better results than lower-quality ones in terms of precision and coverage. However, in our experience the information loss is limited, and in certain scenarios low-quality transcripts could probably be used instead of accurate ones. One example is real-time analysis for conversational guidance, where the “human effect” can filter out system misdetections or misclassifications: the agent simply ignores them.

The next step depends on the scenario, but it typically involves requests to one or several of our APIs and services, sometimes using existing language models and sometimes models developed specifically for the occasion; the results are then combined and aggregated to extract valuable insights that build up the final global result.

Technically, each transcript is first parsed to extract the information as a list of utterances. Each utterance contains:

- the identification of the speaker (AGENT or CUSTOMER). If no explicit identification is provided, we have classifiers that infer it from the text and the type of contact center (inbound or outbound).

- the starting time (in relation to the beginning of the call)

- the voice transcript
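As an illustration of this parsing step, the sketch below assumes a hypothetical plain-text transcript format with one utterance per line, `[HH:MM:SS] SPEAKER: text`, where the speaker prefix may be missing. The format, the `Utterance` class and the `parse_transcript` function are our own assumptions for the example, not the output format of any particular speech-to-text provider. Utterances without a speaker are left as `None`, which is where a speaker classifier would step in.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical line format: "[HH:MM:SS] SPEAKER: text"; the speaker prefix is optional.
LINE_RE = re.compile(r"^\[(\d{2}):(\d{2}):(\d{2})\]\s*(?:(AGENT|CUSTOMER):\s*)?(.*)$")


@dataclass
class Utterance:
    speaker: Optional[str]  # "AGENT", "CUSTOMER", or None when not identified
    start: int              # seconds since the beginning of the call
    text: str               # voice transcript of the utterance


def parse_transcript(raw: str) -> List[Utterance]:
    """Split a raw transcript into a list of utterances, skipping malformed lines."""
    utterances = []
    for line in raw.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue
        hours, minutes, seconds, speaker, text = match.groups()
        start = int(hours) * 3600 + int(minutes) * 60 + int(seconds)
        utterances.append(Utterance(speaker, start, text.strip()))
    return utterances


SAMPLE = """[00:00:02] AGENT: Good morning, how can I help you?
[00:00:06] CUSTOMER: My internet connection keeps dropping.
[00:00:11] I have called three times already."""

utterances = parse_transcript(SAMPLE)
for u in utterances:
    print(u.speaker, u.start, u.text)
```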

Then, each call is processed using our semantic technology, first utterance by utterance and then aggregating the information.

Text Analytics for Understanding Customers

Interactions in the multi-channel contact center are an inexhaustible source of information about our customers: unmet needs, desired product features, problems of use, intentions, attitudes, opinions, causes of satisfaction or dissatisfaction, perception of our brand, preferences, comparisons with the competition, and others.

The use of text analytics to understand customers has been covered extensively in this blog, for example here and here.

Text Analytics for Managing and Optimizing the Contact Center

The deep analysis of interactions can be used to manage and optimize contact center operations, both through the real-time understanding of individual conversations and through their offline aggregate analysis. Our customers are developing applications both for real-time scenarios and for offline analysis (after the call).

Real-time Analysis

In this scenario there are applications focused on the agent:

- Provide him/her with conversational guidance to improve the quality of service. For instance, if no sign of empathy or politeness is detected within a certain period of time, raise a “Lack of empathy” alert.

- Dynamically display a conversation checklist to the agent depending on the topic of the call (for instance, whether the customer is calling to complain or to ask for a change in the service), or additional information that makes his/her work more efficient (for example, the emails or phone numbers of another department, if the customer may need them).
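The “Lack of empathy” alert can be sketched as a sliding time window over the tagged utterances. This minimal sketch assumes each utterance has already been labeled with behavioral signals such as `Empathy` or `Politeness`; the `LabeledUtterance` class, the `empathy_alerts` function and the 60-second default window are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Signals that reset the "Lack of empathy" timer (labels from a behavioral signals model).
EMPATHY_SIGNS = {"Empathy", "Politeness"}


@dataclass
class LabeledUtterance:
    speaker: str                                    # "AGENT" or "CUSTOMER"
    start: int                                      # seconds since the beginning of the call
    labels: Set[str] = field(default_factory=set)   # signals detected in this utterance


def empathy_alerts(stream: List[LabeledUtterance], window: int = 60) -> List[int]:
    """Return the call times (s) at which a 'Lack of empathy' alert would be raised."""
    alerts = []
    last_sign = 0  # the first window starts at the beginning of the call
    for u in stream:  # utterances are assumed to arrive in chronological order
        if u.speaker == "AGENT" and u.labels & EMPATHY_SIGNS:
            last_sign = u.start
        elif u.start - last_sign > window:
            alerts.append(u.start)
            last_sign = u.start  # restart the window so the alert is not repeated on every turn
    return alerts


call = [
    LabeledUtterance("AGENT", 0, {"Politeness"}),
    LabeledUtterance("CUSTOMER", 20, {"Issue>Support"}),
    LabeledUtterance("AGENT", 45),
    LabeledUtterance("CUSTOMER", 70, {"SpeechAlert>Angry"}),
    LabeledUtterance("AGENT", 90, {"Empathy"}),
    LabeledUtterance("CUSTOMER", 130),
]
print(empathy_alerts(call))  # [70]
```

Because the check only runs when a new utterance arrives, a live deployment would also need a timer to catch long silences; that part is left out of the sketch.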

There are also applications aimed at the agent supervisor or the company:

- Detect when the call is getting out of control, for instance, because of inappropriate language, strongly negative opinions, harmful emotions (anger or wrath) or intents (cancelling the service, returning a product, spreading negative recommendations…), or disputes, disagreements, reproaches or contradictions between the customer and the agent.

- Detect when the call is deviating from the set objectives: inappropriate topics, mentions of competitors, out-of-target products, frequent requests for clarification, etc.

- Check adherence to procedures and regulations, e.g., script compliance (salutation, introduction, product positioning, complementary information, closing) in an outbound call.

- Sale validation: in highly regulated markets, check that all the required information flows and operational conditions have taken place for a sale to be considered legitimate.
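Script compliance can be sketched as an order-aware checklist, assuming a classifier has already labeled the agent's utterances with the script step each one corresponds to. The step labels (`Salutation`, `ProductPositioning`, etc.) and the `check_script` function are hypothetical; the check reports which steps are missing and whether the first occurrence of each detected step follows the script order.

```python
from typing import List, Tuple

# Outbound script steps named in the text; the label strings are hypothetical.
SCRIPT = ["Salutation", "Introduction", "ProductPositioning",
          "ComplementaryInformation", "Closing"]


def check_script(agent_steps: List[str]) -> Tuple[List[str], bool]:
    """agent_steps: script-step labels detected in the agent's utterances, in call order.

    Returns the missing steps and whether the detected steps follow the script order.
    """
    missing = [step for step in SCRIPT if step not in agent_steps]
    # Keep only the first occurrence of each known step
    first_seen: List[str] = []
    for step in agent_steps:
        if step in SCRIPT and step not in first_seen:
            first_seen.append(step)
    positions = [SCRIPT.index(step) for step in first_seen]
    in_order = positions == sorted(positions)
    return missing, in_order


missing, in_order = check_script(["Salutation", "ProductPositioning", "Introduction", "Closing"])
print(missing, in_order)  # ['ComplementaryInformation'] False
```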

Post Analysis

Examples of applications are:

- Develop a Speech Analytics Dashboard including top reasons for calling, mentions of products or services, competitors, quality assessment, etc., to generate metrics that are useful for the service holder and to assess the evolution of sentiment, empathy, emotion, or intent.

- Generate predictive models for successful calls, i.e., to detect behaviors and situations that commonly occur in calls that are marked as successful, based on mentioned topics, subjects (sell, service, etc.), evolution of polarity, emotion or intent, summary or word cloud of the call, etc.
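For the dashboard, per-call results have to be rolled up across the whole repository. A minimal sketch, assuming each analyzed call has already been reduced to a hypothetical record with its main reason for calling (e.g., from intention analysis) and its call-level polarity tag; the field names and the `dashboard_metrics` function are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List


def dashboard_metrics(calls: List[Dict[str, str]], top_n: int = 3) -> Dict[str, object]:
    """Aggregate per-call results into a few repository-level dashboard figures."""
    reasons = Counter(call["reason"] for call in calls)
    negative = sum(1 for call in calls if call["polarity"] in ("N", "N+"))
    return {
        "top_reasons": reasons.most_common(top_n),  # [(reason, count), ...]
        "negative_share": negative / len(calls) if calls else 0.0,
    }


calls = [
    {"reason": "Billing", "polarity": "N"},
    {"reason": "Billing", "polarity": "NEU"},
    {"reason": "Cancellation", "polarity": "N+"},
    {"reason": "Coverage", "polarity": "P"},
    {"reason": "Billing", "polarity": "P"},
]
metrics = dashboard_metrics(calls, top_n=2)
print(metrics["top_reasons"], metrics["negative_share"])
```

Tracking the same figures per day or week gives the evolution of sentiment or intent mentioned above.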

Analysis Dimensions and their Implementation

Customer scenarios include one or several of the following dimensions of analysis, all of them supported by standard MeaningCloud products.

Behavioral Signals

Specific models aimed at detecting different behavioral signals during the call, such as empathy or politeness (or the lack of them), expressions of satisfaction or dissatisfaction, possible issues (need for support, lack of information, accessibility or effectiveness of the service, etc.) or speech alerts (signs of irritation, concern, impatience or lack of understanding).

| Label | Description | Main producer |
|---|---|---|
| Empathy | Sign of empathy | Agent/Customer |
| Politeness | Sign of politeness | Agent |
| Satisfaction | Explicit sign of satisfaction | Customer |
| Dissatisfaction | Explicit sign of dissatisfaction | Customer |
| Issue>Generic | Generic issue (not in any of the other categories) | Customer |
| Issue>Support | Need for technical support, or notification of a problem | Customer |
| Issue>LackOfInformation | Complaint about the lack of information | Customer |
| Issue>LackOfAccessibility | Complaint about the accessibility of the service or the communication between company and customer | Customer |
| Issue>LackOfEffectiveness | Complaint about the lack of effectiveness, efficiency or commitment of the company | Customer |
| Issue>SlowProcess | Complaint about a slow or tedious process | Customer |
| Issue>UnreliableProcess | Untrustworthy product/service | Customer |
| SpeechAlert>NotUnderstand | Explicit sign of not understanding | Agent/Customer |
| SpeechAlert>Concerned | Explicit sign of concern | Customer |
| SpeechAlert>Angry | Explicit sign of irritation | Customer |
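Because the labels are hierarchical (`Issue>Support`, `SpeechAlert>Angry`), they can be rolled up to their top-level category when aggregating a call. The sketch below assumes the detected signals arrive as hypothetical `(speaker, label)` pairs and counts them per speaker and top-level category; the function name and input shape are illustrative, not part of a MeaningCloud API.

```python
from collections import Counter
from typing import Iterable, Tuple


def signal_summary(tagged: Iterable[Tuple[str, str]]) -> Counter:
    """Count behavioral signals per (speaker, top-level category)."""
    counts: Counter = Counter()
    for speaker, label in tagged:
        category = label.split(">", 1)[0]  # "Issue>SlowProcess" -> "Issue"
        counts[(speaker, category)] += 1
    return counts


summary = signal_summary([
    ("AGENT", "Politeness"),
    ("CUSTOMER", "Issue>Support"),
    ("CUSTOMER", "Issue>SlowProcess"),
    ("CUSTOMER", "SpeechAlert>Angry"),
    ("AGENT", "Empathy"),
])
print(summary[("CUSTOMER", "Issue")])  # 2
```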

Voice of the Customer

Call tagging using MeaningCloud's standard Voice of the Customer models. Several models are available depending on the call domain: banking/financial sector, insurance, telco, retail, and a generic model for the remaining domains. All models share three common dimensions: Channel, Customer Service and Quality. They also include dimensions specific to each domain, most often Company, Product/Service, Condition and Operation.

| Label | Description |
|---|---|
| CustomerService>AccessibilityCommunication | Accessibility & communication |
| CustomerService>Advertising | Advertising |
| CustomerService>Advice | Advice |
| CustomerService>CommercialPressure | Commercial pressure |
| CustomerService>Commitment | Commitment |
| CustomerService>IncidentManagement | Incident management |
| CustomerService>Information | Information |
| CustomerService>Service | Service |
| CustomerService>SupportMaintenance | Support and maintenance |
| CustomerService>TrainingKnowledge | Training and knowledge |
| CustomerService>Treatment | Customer treatment |

Intention Analysis

These models try to identify the reason for the call (i.e., its root cause) and the customer's stage in the customer journey, and are supported by our Intention Analysis Vertical Pack.

Sentiment Polarity

Our Sentiment Analysis API identifies the polarity of each utterance and of the complete call. The output is one of the following tags: N+ (strongly negative), N (negative), NEU (neutral sentiment, neither good nor bad, or the case when positive polarities compensate negative ones), P (positive), P+ (strongly positive) and NONE (no sentiment).
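The text does not describe how utterance polarities are combined into the call-level tag. The sketch below shows one simple possibility, assuming a numeric mapping of the tags (from N+ = -2 up to P+ = 2), ignoring NONE, and thresholding the mean; the mapping, the thresholds and the `call_polarity` function are our own illustrative assumptions, not how the Sentiment Analysis API works internally. Note how a positive and a negative utterance cancel out into NEU, matching the tag definition above.

```python
from typing import List

# Hypothetical numeric scale for the polarity tags; NONE (no sentiment) is left out on purpose.
SCORES = {"N+": -2, "N": -1, "NEU": 0, "P": 1, "P+": 2}


def call_polarity(utterance_tags: List[str]) -> str:
    """Combine utterance-level polarity tags into a single call-level tag."""
    values = [SCORES[tag] for tag in utterance_tags if tag in SCORES]
    if not values:
        return "NONE"  # no utterance expressed any sentiment
    mean = sum(values) / len(values)
    if mean <= -1.5:
        return "N+"
    if mean <= -0.5:
        return "N"
    if mean < 0.5:
        return "NEU"  # also covers positive and negative utterances cancelling out
    if mean < 1.5:
        return "P"
    return "P+"


print(call_polarity(["P", "NONE", "N"]))  # NEU
print(call_polarity(["N+", "N", "N+"]))   # N+
```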

Emotion Recognition

We use Plutchik’s Wheel of Emotions, adapted to the spontaneous user interaction typical in contact center conversations, supported by our Emotion Recognition Vertical Pack.

Call Summary Extraction

We have a specifically designed summarization API that generates an extractive summary by selecting the most relevant sentences in the transcript, giving a quick idea of the interaction. The summary is produced after the interaction, using the complete text.
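The internals of the summarization API are not described here. To show what extractive summarization means in practice, the sketch below uses a classic word-frequency baseline (in the spirit of Luhn's method) as a stand-in: each sentence is scored by the average frequency of its non-stopword words across the transcript, and the top `k` sentences are returned in their original order. The tiny stopword list and the `summarize` function are illustrative assumptions.

```python
import re
from collections import Counter
from typing import List

# Small illustrative stopword list; a real system would use a language-specific one.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "i", "you", "is", "it", "my",
             "your", "in", "for", "on", "that", "this", "with", "have", "be", "me", "let"}


def content_words(sentence: str) -> List[str]:
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def summarize(text: str, k: int = 2) -> List[str]:
    """Return the k highest-scoring sentences, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


transcript = ("Hello, thanks for calling. My router stopped working yesterday. "
              "The router light is red and the internet is down. "
              "Let me check the router status for you. Thank you, goodbye.")
for sentence in summarize(transcript, k=2):
    print(sentence)
```

Being purely extractive, the output is always a subset of the original sentences, which keeps it faithful to what was actually said in the call.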

Deployment

This functionality can be easily deployed as a private REST API, provided in a Software as a Service model or installed on-premises, making requests to MeaningCloud’s Text Analytics solution, which also includes a console for resource customization (model creation and editing).

Our professional team can effectively address Speech projects in any complex scenario with the maximum guarantees of success. If you would like more information about this area, please do not hesitate to contact us. We will be glad to help you!